OpenShift Installation

SSH Issue

Files for installation

Installation Steps:

First, copy the packages tar file to the bastion host:

scp .\pre-installation.tar root@10.1.10.230:/root/

(Download the pre-installation.tar file from the Notion page first.)

After extracting the archive, /root contains the following files:

ls /root
01-packages.sh  03-check-rootpermit.sh  05-ansible-directory.sh  anaconda-ks.cfg
02-vim-config.sh  04-ansible-config.sh  06-ssh-key.sh  pre-installation.tar

Run all of the scripts above first.
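A minimal sketch of running the scripts in one go, assuming they are meant to run in the order of their numeric prefixes and that a failure should stop the sequence. The function name `run_numbered_scripts` is hypothetical and not part of the tar file.

```shell
#!/usr/bin/env bash
# Run every NN-*.sh script in a directory in lexical (numeric-prefix) order
# and stop at the first one that fails.
# The default directory /root matches the listing above; the ordering rule
# is an assumption based on the file names.
run_numbered_scripts() {
    local dir="${1:-/root}"
    local script
    for script in "$dir"/[0-9][0-9]-*.sh; do
        [ -e "$script" ] || continue   # the glob matched nothing
        echo "Running $script"
        bash "$script" || { echo "FAILED: $script" >&2; return 1; }
    done
}

# Example: run_numbered_scripts /root
```

Stopping at the first failure makes it easier to see which step needs attention before Ansible is configured.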

Ansible is now installed. Move all of the Ansible files into a new ‘ansible’ directory, which we create manually:

mv group_vars playbook roles ansible.cfg inventory ansible

Add the following entries to /etc/hosts on the bastion VM:

<bastion-IP> api.ocp.spelix2.com

<bastion-IP> api-ocp-spelix2-com
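The entries can also be added from the shell. The sketch below is one possible way to do it without creating duplicates on a second run; `add_hosts_entry` is a hypothetical helper name, and the third argument exists only so it can be tried on a copy of the file first.

```shell
#!/usr/bin/env bash
# Append "<ip> <name>" to a hosts file unless the name is already listed.
# Defaults to /etc/hosts; pass another path to test on a copy.
add_hosts_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # Compare each hostname field exactly, so dots are not treated as wildcards.
    if awk -v n="$name" \
        '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' \
        "$file" 2>/dev/null; then
        echo "$name already present in $file"
    else
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Example (replace the IP with the real bastion IP):
#   add_hosts_entry 10.1.10.230 api.ocp.spelix2.com
```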

Next, create the ocp.yaml playbook at the following path:

vim ansible/playbook/ocp.yaml

- name: Install HAProxy
  hosts: localhost
  roles:
    - { role: install-haproxy, tags: ['haproxy'] }
  vars_files:
    - ../group_vars/all.yaml

- name: Install DNS
  hosts: localhost
  roles:
    - { role: install-dns, tags: ['dns'] }
  vars_files:
    - ../group_vars/all.yaml

- name: Install TFTPBOOT
  hosts: localhost
  roles:
    - { role: install-tftpboot, tags: ['tftp'] }
  vars_files:
    - ../group_vars/all.yaml

- name: Install DHCP
  hosts: localhost
  roles:
    - { role: install-dhcp, tags: ['dhcp'] }
  vars_files:
    - ../group_vars/all.yaml

- name: Install web server (httpd)
  hosts: localhost
  roles:
    - { role: install-httpd, tags: ['httpd'] }
  vars_files:
    - ../group_vars/all.yaml

- name: Configuration Firewall (if you have two networks, it must be done)
  hosts: localhost
  roles:
    - { role: config-firewall, tags: ['firewall'] }
  vars_files:
    - ../group_vars/all.yaml

- name: Download installation files
  hosts: localhost
  roles:
    - { role: download-files, tags: ['download'] }
  vars_files:
    - ../group_vars/all.yaml

- name: Create OCP Directory
  hosts: localhost
  roles:
    - { role: create-ocp-directory, tags: ['ocp-dir'] }
  vars_files:
    - ../group_vars/all.yaml

- name: Create OCP install-config.yaml
  hosts: localhost
  roles:
    - { role: create-ocp-config, tags: ['ocp-config'] }
  vars_files:
    - ../group_vars/all.yaml

Next, create the all.yaml variables file:

vim ansible/group_vars/all.yaml

---
client_files_url: "https://mirror.openshift.com/pub/openshift-v4/clients/ocp/4.10.37"
installation_files_url: "https://mirror.openshift.com/pub/openshift-v4/x86_64/dependencies/rhcos/4.10/4.10.37"
ocp_client_files:
  - openshift-client-linux.tar.gz
  - openshift-install-linux.tar.gz
rhcos_binaries:
  - rhcos-live-initramfs.x86_64.img
  - rhcos-live-kernel-x86_64
  - rhcos-live-rootfs.x86_64.img
ipconfig: "dhcp"
ppc64le: false
uefi: true
disk: sda #disk where you are installing RHCOS on the masters/workers
networkname:
  external: "ens192"
  internal: "ens224"
ocp:
  root_dir: "/root"
  network: "OVNKubernetes" # openshift network OpenShiftSDN/OVNKubernetes
  sshkey: '' # create ssh key: ssh-keygen -t rsa -b 4096 -N ''
  secret: ''
  # openshift pull secret file: https://console.redhat.com/openshift/install/metal/user-provisioned
helper:
  name: "bastion" #hostname for your helper node
  ipaddr: "192.168.222.1" #current IP address of the helper
  networkifacename: "ens224" #interface of the helper node,ACTUAL name of the interface, NOT the NetworkManager name
dns:
  domain: "spelix.com" #DNS server domain. Should match baseDomain inside the install-config.yaml file.
  clusterid: "ocp" #needs to match what you will for metadata.name inside the install-config.yaml file
  forwarder1: "192.168.222.1" #DNS forwarder
  forwarder2: "8.8.8.8" #second DNS forwarder
  lb_ipaddr: "{{ helper.ipaddr }}" #Load balancer IP, it is optional, the default value is helper.ipaddr
dhcp:
  router: "192.168.222.1" #default gateway of the network assigned to the masters/workers
  bcast: "192.168.222.255" #broadcast address for your network
  netmask: "255.255.255.0" #netmask that gets assigned to your masters/workers
  poolstart: "192.168.222.200" #First address in your dhcp address pool
  poolend: "192.168.222.220" #Last address in your dhcp address pool
  ipid: "192.168.222.0" #ip network id for the range
  netmaskid: "255.255.255.0" #networkmask id for the range.
  ntp: "192.168.222.1" #ntp server address
  dns: "" #domain name server, it is optional, the default value is set to helper.ipaddr
bootstrap:
  name: "bootstrap" #hostname (WITHOUT the fqdn) of the bootstrap node
  ipaddr: "192.168.222.200" #IP address that you want set for bootstrap node
  macaddr: "00:50:56:bd:a4:01" #The mac address for dhcp reservation
masters:
  - name: "master01" #hostname (WITHOUT the fqdn) of the master node (x of 3)
    ipaddr: "192.168.222.201" #The IP address (x of 3) that you want set
    macaddr: "00:50:56:bd:29:94" #The mac address for dhcp reservation
  - name: "master02"
    ipaddr: "192.168.222.202"
    macaddr: "00:50:56:bd:bb:64"
  - name: "master03"
    ipaddr: "192.168.222.203"
    macaddr: "00:50:56:bd:37:59"
workers:
  - name: "worker01" #hostname (WITHOUT the fqdn) of the worker node you want to set
    ipaddr: "192.168.222.211" #The IP address that you want set (1st node)
    macaddr: "00:50:56:bd:28:fb" #The mac address for dhcp reservation (1st node)
  - name: "worker02"
    ipaddr: "192.168.222.212"
    macaddr: "00:50:56:bd:a1:1a"
  - name: "worker03"
    ipaddr: "192.168.222.213"
    macaddr: "00:50:56:bd:b2:ea"

## My all.yaml for the 10th practice cluster

---

client_files_url: "https://mirror.openshift.com/pub/openshift-v4/clients/ocp/4.10.37"

installation_files_url: "https://mirror.openshift.com/pub/openshift-v4/x86_64/dependencies/rhcos/4.10/4.10.37"

ocp_client_files:

- openshift-client-linux.tar.gz

- openshift-install-linux.tar.gz

rhcos_binaries:

- rhcos-live-initramfs.x86_64.img

- rhcos-live-kernel-x86_64

- rhcos-live-rootfs.x86_64.img

ipconfig: "dhcp"

ppc64le: false

uefi: true

disk: sda #disk where you are installing RHCOS on the masters/workers

networkname:

external: "ens192"

internal: "ens224"

ocp:

root_dir: "/root"

network: "OVNKubernetes" # openshift network OpenShiftSDN/OVNKubernetes

sshkey: 'ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQDMkx7b+ZEpfQhWs0SrCeUXiR8d00ST+f43tEpaLtNVOSE+GwlwmbuaOPqOBM4Efjw8BxiZQ2JX506t6W7zDZ4UK2XbpkjnLbYJxAy4gPK7/ZVr6MI6Fn7zwhOFC2sB31bb9RtfYcNeXQ4tCHdNy/DZsIrE/rEH3CrCzYiQspKHYL5ZvNiQo/9eu7cJ/MeZTB+TvkFV9m/GabQtr72q2FdnLdic0B3a4tM42M2WVbMUlP5zFyr8SeDLrTOOP6nygqlViqfvF8KUIJkNNqwvIkchaepZ82FVnnrpM81o3n3UohhRRnuHe/LAOiGbQDrXgxWGRan9xdS3i0IV4hwtxKoc/d+a68AgyBQ/j5jANUWtQ2z5cls4nJo4g7d+h4UZ+MOVQvrN+QHJMq2Nb1QmfPi8OAaFIfUldFwcgoDeZ8A0UllUVmYE1jcehbCSS9VqCX5wr9jT8oVzf8E7iN2WEbh/nwxX4OGjYQwZo+iXegE7HG44WCaFtYugz5MQjVcxvya4X3kpUOztK89GK5R4LYO9yekBjQcopcSUS6MEj6zbZcb5+yiO/ovelZTCdi/W2THsvQHmeibhb1W896oSTLG8xDbKP7z/V4LJTvBd1DuQ17VO2UaFcUIZirmggTvV0c+HQtDZdKWujaIk94esirm2MCJQykPATXxvjiLSG8rfYw== root@bastion' # create ssh key: ssh-keygen -t rsa -b 4096 -N ''

secret: '<pull secret JSON from console.redhat.com, redacted>'

# openshift pull secret file: <https://console.redhat.com/openshift/install/metal/user-provisioned>

helper:

name: "bastion" #hostname for your helper node

ipaddr: "192.168.229.1" #current IP address of the helper

networkifacename: "ens224" #interface of the helper node,ACTUAL name of the interface, NOT the NetworkManager name

dns:

domain: "spelix.com" #DNS server domain. Should match baseDomain inside the install-config.yaml file.

clusterid: "ocp" #needs to match what you will for metadata.name inside the install-config.yaml file

forwarder1: "192.168.229.1" #DNS forwarder

forwarder2: "8.8.8.8" #second DNS forwarder

lb_ipaddr: "{{ helper.ipaddr }}" #Load balancer IP, it is optional, the default value is helper.ipaddr

dhcp:

router: "192.168.229.1" #default gateway of the network assigned to the masters/workers

bcast: "192.168.229.255" #broadcast address for your network

netmask: "255.255.255.0" #netmask that gets assigned to your masters/workers

poolstart: "192.168.229.200" #First address in your dhcp address pool

poolend: "192.168.229.220" #Last address in your dhcp address pool

ipid: "192.168.229.0" #ip network id for the range

netmaskid: "255.255.255.0" #networkmask id for the range.

ntp: "192.168.229.1" #ntp server address

dns: "" #domain name server, it is optional, the default value is set to helper.ipaddr

bootstrap:

name: "bootstrap" #hostname (WITHOUT the fqdn) of the bootstrap node

ipaddr: "192.168.229.200" #IP address that you want set for bootstrap node

macaddr: "00:50:56:bd:e6:ad" #The mac address for dhcp reservation

masters:

- name: "master01" #hostname (WITHOUT the fqdn) of the master node (x of 3)

ipaddr: "192.168.229.201" #The IP address (x of 3) that you want set

macaddr: "00:50:56:bd:55:a1" #The mac address for dhcp reservation

- name: "master02"

ipaddr: "192.168.229.202"

macaddr: "00:50:56:bd:5c:b2"

- name: "master03"

ipaddr: "192.168.229.203"

macaddr: "00:50:56:bd:ce:53"

workers:

- name: "worker01" #hostname (WITHOUT the fqdn) of the worker node you want to set

ipaddr: "192.168.229.211" #The IP address that you want set (1st node)

macaddr: "00:50:56:bd:76:b0" #The mac address for dhcp reservation (1st node)

- name: "worker02"

ipaddr: "192.168.229.212"

macaddr: "00:50:56:bd:06:0e"

- name: "worker03"

ipaddr: "192.168.229.213"

macaddr: "00:50:56:bd:32:82"

## all.yaml for the 9th cluster

---

client_files_url: "https://mirror.openshift.com/pub/openshift-v4/clients/ocp/4.10.37"

installation_files_url: "https://mirror.openshift.com/pub/openshift-v4/x86_64/dependencies/rhcos/4.10/4.10.37"

ocp_client_files:

- openshift-client-linux.tar.gz

- openshift-install-linux.tar.gz

rhcos_binaries:

- rhcos-live-initramfs.x86_64.img

- rhcos-live-kernel-x86_64

- rhcos-live-rootfs.x86_64.img

ipconfig: "dhcp"

ppc64le: false

uefi: true

disk: sda #disk where you are installing RHCOS on the masters/workers

networkname:

external: "ens192"

internal: "ens224"

ocp:

root_dir: "/root"

network: "OVNKubernetes" # openshift network OpenShiftSDN/OVNKubernetes

sshkey: 'ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQDUs0fFARly9xt87GwDCpYmmJW17kIedUcOsYn6cxQIklMm4o0H0C7lZA3XZhOJ/j1PXn5wpTOkEX0x7eyl0mxXNlvGneyhrXRvsy/VCzRzBAoUDihIVqsCyi6ahT0UzLRDJB7X+g3JDRpWEN2IzcWZ9yvlizq6s5S3Q0EXIh/4g2c+LXzl/mzaeYG1BvR6DSNDRSCxguPSG/+bmzZDjTozUhz5vrLtEvpAQEzejWXp45PxThUhi27Qu285i/DtGuMnEeWIUX0+yiVMIwZe0jhdVxoE1lucR4dpt/OM7dPhxo950YkYoA48bsDZVFittlLMvUIFoMkWAcJ8k8IMmfk3GqgcKoRnAf4yEmRr5+eKGXc7DOc9uvxqeaFEq8WP2e4jXuev6MqH19zq7579Tno04Ik/x2/TcJ9sPFORpRjPLum9OtZXiEFf6yoRYkjFEsLH3OnUJDBy+wQiwnYi1UuuTyBSQd/vXWQ0Bns0uXJMHGd3wrIgvGFsnX/ssqIlX+v77m/sCvxJo5W39FQBOSQ1/giAvs6KRXD3LdIG/GVWJxi+Q2OA42ylJQq2O9ZAfDENl4KkpgehRB0ahxU0GMiR09GUkki4Tw8JSDx3ft8BncIGoLbpF8mBNU1ChSx8Ukk9TW6RJWXi7nClF+tfzyBH2wWMssfndn8W66RFlxD86Q== root@bastion'

secret: '<pull secret JSON from console.redhat.com, redacted>'

# openshift pull secret file: <https://console.redhat.com/openshift/install/metal/user-provisioned>

helper:

name: "bastion" #hostname for your helper node

ipaddr: "192.168.228.1" #current IP address of the helper

networkifacename: "ens224" #interface of the helper node,ACTUAL name of the interface, NOT the NetworkManager name

dns:

domain: "spelix2.com" #DNS server domain. Should match baseDomain inside the install-config.yaml file.

clusterid: "ocp" #needs to match what you will for metadata.name inside the install-config.yaml file

forwarder1: "192.168.228.1" #DNS forwarder

forwarder2: "8.8.8.8" #second DNS forwarder

lb_ipaddr: "{{ helper.ipaddr }}" #Load balancer IP, it is optional, the default value is helper.ipaddr

dhcp:

router: "192.168.228.1" #default gateway of the network assigned to the masters/workers

bcast: "192.168.228.255" #broadcast address for your network

netmask: "255.255.255.0" #netmask that gets assigned to your masters/workers

poolstart: "192.168.228.200" #First address in your dhcp address pool

poolend: "192.168.228.220" #Last address in your dhcp address pool

ipid: "192.168.228.0" #ip network id for the range

netmaskid: "255.255.255.0" #networkmask id for the range.

ntp: "192.168.228.1" #ntp server address

dns: "" #domain name server, it is optional, the default value is set to helper.ipaddr

bootstrap:

name: "bootstrap" #hostname (WITHOUT the fqdn) of the bootstrap node

ipaddr: "192.168.228.200" #IP address that you want set for bootstrap node

macaddr: "00:50:56:bd:e3:eb" #The mac address for dhcp reservation

masters:

- name: "master01" #hostname (WITHOUT the fqdn) of the master node (x of 3)

ipaddr: "192.168.228.201" #The IP address (x of 3) that you want set

macaddr: "00:50:56:bd:bb:e6" #The mac address for dhcp reservation

- name: "master02"

ipaddr: "192.168.228.202"

macaddr: "00:50:56:bd:9b:7b"

- name: "master03"

ipaddr: "192.168.228.203"

macaddr: "00:50:56:bd:ee:19"

workers:

- name: "worker01" #hostname (WITHOUT the fqdn) of the worker node you want to set

ipaddr: "192.168.228.211" #The IP address that you want set (1st node)

macaddr: "00:50:56:bd:23:b7" #The mac address for dhcp reservation (1st node)

- name: "worker02"

ipaddr: "192.168.228.212"

macaddr: "00:50:56:bd:f7:9c"

- name: "worker03"

ipaddr: "192.168.228.213"

macaddr: "00:50:56:bd:77:69"

Recheck all the values (IP addresses, MAC addresses, pull secret, SSH key, domain name) before continuing.
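Part of this recheck can be scripted. The sketch below looks for a MAC or IP address that was pasted twice, a common copy-and-paste mistake when reusing a file from another cluster. `duplicate_values` is a hypothetical helper; it only reads the file as text and does not validate the YAML.

```shell
#!/usr/bin/env bash
# Print every value of the given key (for example macaddr or ipaddr) that
# occurs more than once in a vars file. Prints nothing if all are unique.
duplicate_values() {
    local key="$1" file="$2"
    # Keep only lines whose key is exactly $key (so lb_ipaddr is ignored),
    # strip the key, the quotes and any trailing comment, then list repeats.
    grep -E "^[[:space:]]*(-[[:space:]]*)?${key}:" "$file" \
        | sed -E 's/^[^:]*:[[:space:]]*"?([^"#[:space:]]*).*/\1/' \
        | sort | uniq -d
}

# Example, run from the directory that contains the ansible directory:
#   duplicate_values macaddr ansible/group_vars/all.yaml
#   duplicate_values ipaddr  ansible/group_vars/all.yaml
```

Empty output from both commands means no address is repeated; it does not confirm that the addresses match the actual VMs.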

Then copy over all of the installation files:

# In Windows PowerShell, from the directory containing the 'roles' folder with all of the installation files

scp -r .\roles\ root@10.1.10.230:/root/ansible/

root@10.1.10.230's password:

main.yaml 100% 1874 328.4KB/s 00:00

main.yaml 100% 169 33.7KB/s 00:00

install-config.yaml.j2 100% 583 114.0KB/s 00:00

main.yaml 100% 155 15.2KB/s 00:00

main.yaml 100% 1793 350.2KB/s 00:00

default.j2 100% 1484 159.1KB/s 00:00

main.yaml 100% 285 28.3KB/s 00:00

dhcpd.conf.j2 100% 1894 189.7KB/s 00:00

main.yaml 100% 1397 133.5KB/s 00:00

dns.tar 100% 11KB 734.8KB/s 00:00

named.conf.j2 100% 1728 168.9KB/s 00:00

named.rfc1912.zones.j2 100% 1856 378.1KB/s 00:00

ocp.zones.j2 100% 2507 240.8KB/s 00:00

reverse.rev.j2 100% 1372 134.0KB/s 00:00

main.yaml 100% 274 26.8KB/s 00:00

haproxy.cfg.j2 100% 4691 466.7KB/s 00:00

main.yaml 100% 280 54.7KB/s 00:00

httpd.conf.j2 100% 12KB 783.3KB/s 00:00

ldlinux.c32 100% 113KB 5.5MB/s 00:00

libutil.c32 100% 22KB 2.2MB/s 00:00

menu.c32 100% 26KB 2.5MB/s 00:00

pxelinux.0 100% 41KB 2.7MB/s 00:00

tftp.tar 100% 207KB 10.1MB/s 00:00

main.yaml 100% 564 53.7KB/s 00:00

Then add localhost to the inventory file as shown below:

vim inventory/ocp

[localhost]

127.0.0.1

Then, inside the ansible directory, use the following commands to check and run each play:

# List the tags to see the installation order

ansible-playbook -i inventory/ocp playbook/ocp.yaml --list-tags

# Installing

ansible-playbook -i inventory/ocp playbook/ocp.yaml --tags <name from the list>
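Running the tags one after another can be wrapped in a small loop. This is a sketch that assumes the tags should run in the same order as the plays in ocp.yaml and that every tag applies to your setup (the firewall play is only needed with two networks). The `PLAYBOOK_CMD` variable and the function name are hypothetical additions for dry runs.

```shell
#!/usr/bin/env bash
# Run each tag from playbook/ocp.yaml in the order the plays are listed,
# stopping at the first failure. Run it from inside the ansible directory.
# Set PLAYBOOK_CMD=echo to print the commands instead of executing them.
run_all_tags() {
    local cmd="${PLAYBOOK_CMD:-ansible-playbook}"
    local tag
    for tag in haproxy dns tftp dhcp httpd firewall download ocp-dir ocp-config; do
        echo "== tag: $tag =="
        $cmd -i inventory/ocp playbook/ocp.yaml --tags "$tag" || return 1
    done
}

# Dry run:  PLAYBOOK_CMD=echo run_all_tags
# Real run: run_all_tags
```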


After running all of the ansible-playbook commands, rename the OpenShift installer YAML file as follows:

mv /root/openshift/config/install-cnfig.yaml /root/openshift/config/install-config.yaml

The install-config.yaml file looks like this:

apiVersion: v1
baseDomain: spelix2.com
compute:
  - hyperthreading: Enabled
    name: worker
    replicas: 0
controlPlane:
  hyperthreading: Enabled
  name: master
  replicas: 3
metadata:
  name: ocp
networking:
  clusterNetworks:
    - cidr: 10.128.0.0/14
      hostPrefix: 23
  networkType: OVNKubernetes
  serviceNetwork:
    - 172.30.0.0/16
platform:
  none: {}
fips: false

pullSecret: '<pull secret JSON from console.redhat.com, redacted>'

sshKey: 'ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQDMkx7b+ZEpfQhWs0SrCeUXiR8d00ST+f43tEpaLtNVOSE+GwlwmbuaOPqOBM4Efjw8BxiZQ2JX506t6W7zDZ4UK2XbpkjnLbYJxAy4gPK7/ZVr6MI6Fn7zwhOFC2sB31bb9RtfYcNeXQ4tCHdNy/DZsIrE/rEH3CrCzYiQspKHYL5ZvNiQo/9eu7cJ/MeZTB+TvkFV9m/GabQtr72q2FdnLdic0B3a4tM42M2WVbMUlP5zFyr8SeDLrTOOP6nygqlViqfvF8KUIJkNNqwvIkchaepZ82FVnnrpM81o3n3UohhRRnuHe/LAOiGbQDrXgxWGRan9xdS3i0IV4hwtxKoc/d+a68AgyBQ/j5jANUWtQ2z5cls4nJo4g7d+h4UZ+MOVQvrN+QHJMq2Nb1QmfPi8OAaFIfUldFwcgoDeZ8A0UllUVmYE1jcehbCSS9VqCX5wr9jT8oVzf8E7iN2WEbh/nwxX4OGjYQwZo+iXegE7HG44WCaFtYugz5MQjVcxvya4X3kpUOztK89GK5R4LYO9yekBjQcopcSUS6MEj6zbZcb5+yiO/ovelZTCdi/W2THsvQHmeibhb1W896oSTLG8xDbKP7z/V4LJTvBd1DuQ17VO2UaFcUIZirmggTvV0c+HQtDZdKWujaIk94esirm2MCJQykPATXxvjiLSG8rfYw== root@bastion'

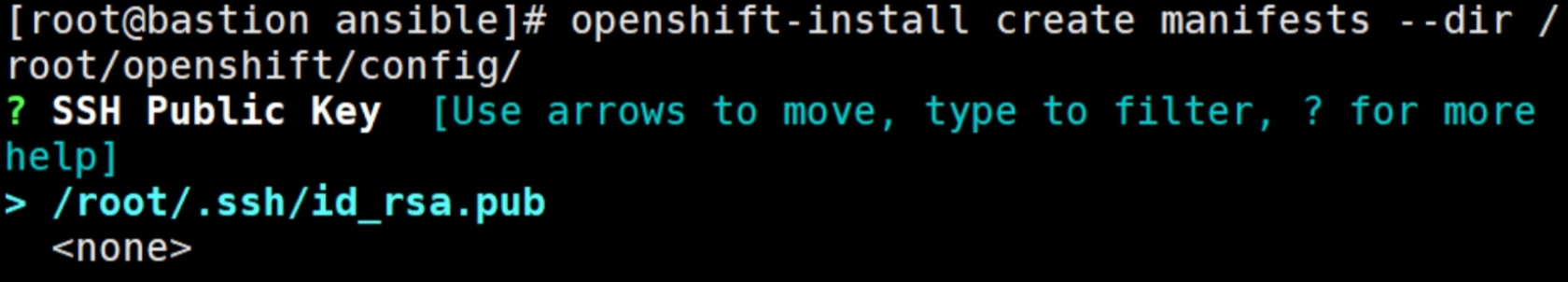

Then, with the file renamed to install-config.yaml, change to the config directory and create the manifests:

cd /root/openshift/config

[root@bastion config]# ls

install-config.yaml

[root@bastion config]# openshift-install create manifests

INFO Consuming Install Config from target directory

WARNING Making control-plane schedulable by setting MastersSchedulable to true for Scheduler cluster settings

INFO Manifests created in: manifests and openshift

[root@bastion config]# ls

manifests openshift
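As the listing above shows, install-config.yaml is consumed by `openshift-install create manifests` and no longer exists afterwards. A small sketch for keeping a copy before running that command follows; the helper name and the default backup location /root/openshift are assumptions, not part of the original setup.

```shell
#!/usr/bin/env bash
# Copy install-config.yaml out of the config directory before it is consumed.
# Prints the path of the backup on success.
backup_install_config() {
    local dir="${1:-/root/openshift/config}"
    local dest="${2:-/root/openshift}"      # assumed backup location
    local backup="$dest/install-config.yaml.bak"
    cp "$dir/install-config.yaml" "$backup" && echo "$backup"
}

# Example, before "openshift-install create manifests":
#   backup_install_config
```

With a backup in place, a failed installation can be retried without regenerating the file through Ansible.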

Then create the ignition files:

openshift-install create ignition-configs

INFO Consuming Master Machines from target directory

INFO Consuming Openshift Manifests from target directory

INFO Consuming OpenShift Install (Manifests) from target directory

INFO Consuming Worker Machines from target directory

INFO Consuming Common Manifests from target directory

INFO Ignition-Configs created in: . and auth

[root@bastion config]# ls

auth bootstrap.ign master.ign metadata.json worker.ign

Then move the .ign files to /var/www/html/ign:

ls

auth bootstrap.ign master.ign metadata.json worker.ign

[root@bastion config]# mv /root/openshift/config/worker.ign /var/www/html/ign/

[root@bastion config]# mv /root/openshift/config/master.ign /var/www/html/ign/

[root@bastion config]# mv /root/openshift/config/bootstrap.ign /var/www/html/ign/

[root@bastion config]# ls

auth metadata.json

[root@bastion config]# ls /var/www/html/ign/

bootstrap.ign master.ign worker.ign

Then give the apache user ownership of the files under the web root:

chown -R apache:apache /var/www/html/*
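Before booting any node, it may be worth confirming that all three ignition files are where the web server expects them. The following is a minimal sketch; `check_ign_files` is a hypothetical helper and it only checks that the files exist and are not empty, not that httpd actually serves them.

```shell
#!/usr/bin/env bash
# Report whether bootstrap.ign, master.ign and worker.ign exist and are
# non-empty in the given directory. Returns non-zero if any is missing.
check_ign_files() {
    local dir="${1:-/var/www/html/ign}" missing=0 f
    for f in bootstrap.ign master.ign worker.ign; do
        if [ -s "$dir/$f" ]; then
            echo "ok: $dir/$f"
        else
            echo "missing or empty: $dir/$f" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example: check_ign_files /var/www/html/ign
```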

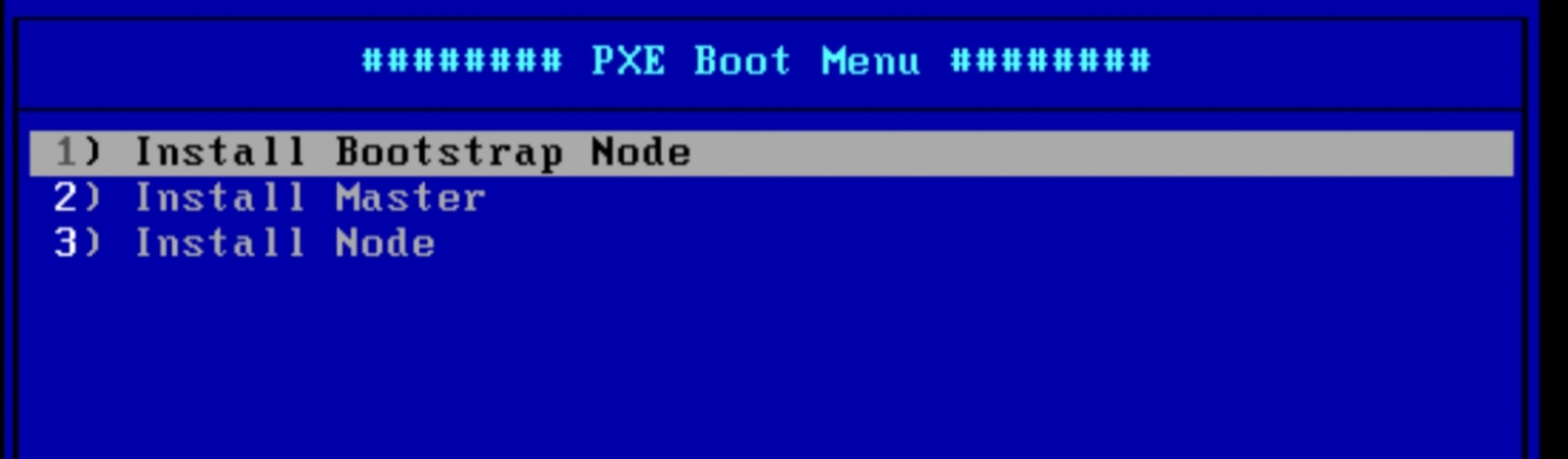

After all settings on the bastion are done, power on the bootstrap node and install it.

Then check that we can SSH to the bootstrap node from the bastion:

ssh core@bootstrap.ocp.spelix.com

[core@bootstrap ~]$

Then power on the 3 master nodes one by one and select the ‘Install Master’ option on each.

Do the same for the 3 worker nodes, selecting the ‘Install Node’ option.

Then export the KUBECONFIG variable so that the ‘oc’ command can be used:

export KUBECONFIG=/root/openshift/config/auth/kubeconfig

source /root/.bashrc

Then approve the certificate signing requests (CSRs):

oc get csr -o name | xargs oc adm certificate approve

We can check the CSR status:

oc get csr

The condition should be ‘Approved’ rather than ‘Pending’. If any CSRs are still pending, run the approve command again.
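New CSRs keep appearing while nodes join, so the approve step usually has to be repeated. A sketch of approving only the pending ones is shown below. It assumes the default table output of `oc get csr`, where the condition is the last column; both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Read "oc get csr" table output on stdin and print the names of the
# requests whose last column (CONDITION) is exactly "Pending".
pending_csr_names() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Approve whatever is pending right now; does nothing if the list is empty.
approve_pending_csrs() {
    local names
    names=$(oc get csr | pending_csr_names)
    if [ -z "$names" ]; then
        echo "no pending CSRs"
        return 0
    fi
    echo "$names" | xargs oc adm certificate approve
}

# Example: run approve_pending_csrs, wait a minute, and run it again
# until "oc get csr" shows no Pending entries.
```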

Then check the nodes:

oc get nodes

NAME STATUS ROLES AGE VERSION

master01.ocp.spelix.com Ready master,worker 12m v1.23.5+8471591

master02.ocp.spelix.com Ready master,worker 12m v1.23.5+8471591

master03.ocp.spelix.com Ready master,worker 11m v1.23.5+8471591

worker01.ocp.spelix.com Ready master,worker 11m v1.23.5+8471591

worker02.ocp.spelix.com Ready worker 72s v1.23.5+8471591

worker03.ocp.spelix.com Ready worker 74s v1.23.5+8471591

## On the 10th cluster

oc get nodes

NAME STATUS ROLES AGE VERSION

master01.ocp.spelix.com Ready master,worker 12m v1.23.5+8471591

master02.ocp.spelix.com Ready master,worker 12m v1.23.5+8471591

master03.ocp.spelix.com Ready master,worker 12m v1.23.5+8471591

worker01.ocp.spelix.com Ready worker 78s v1.23.5+8471591

worker02.ocp.spelix.com Ready worker 77s v1.23.5+8471591

worker03.ocp.spelix.com Ready worker 88s v1.23.5+8471591

(In the first output above, worker01 shows the master role because I mistakenly selected the master option for it during PXE boot.)

Then add the bastion IP and the following hostnames to the Windows hosts file:

<bastion-ip> api.ocp.cpf.com console-openshift-console.apps.ocp.cpf.com oauth-openshift.apps.ocp.cpf.com downloads-openshift-console.apps.ocp.cpf.com alertmanager-main-openshift-monitoring.apps.ocp.cpf.com grafana-openshift-monitoring.apps.ocp.cpf.com prometheus-k8s-openshift-monitoring.apps.ocp.cpf.com thanos-querier-openshift-monitoring.apps.ocp.cpf.com
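Because the line above is long and the domain differs between clusters, it can be generated rather than typed. This sketch builds the same list of hostnames from the bastion IP, the cluster ID and the base domain; `windows_hosts_line` is a hypothetical helper, and the example IP is only the bastion address used earlier in these notes.

```shell
#!/usr/bin/env bash
# Print a single hosts-file line containing the API endpoint and the
# console, OAuth, downloads and monitoring routes for one cluster.
windows_hosts_line() {
    local ip="$1" cluster="$2" domain="$3"
    local apps="apps.$cluster.$domain"
    local host
    printf '%s api.%s.%s' "$ip" "$cluster" "$domain"
    for host in console-openshift-console oauth-openshift downloads-openshift-console \
                alertmanager-main-openshift-monitoring grafana-openshift-monitoring \
                prometheus-k8s-openshift-monitoring thanos-querier-openshift-monitoring; do
        printf ' %s.%s' "$host" "$apps"
    done
    printf '\n'
}

# Example: windows_hosts_line 10.1.10.230 ocp cpf.com
```

Paste the printed line into the hosts file on the Windows machine.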

After that, follow the Notion page from the OpenShift training as it is.

Just remember to apply all of the NFS YAML files during the NFS part:

deployment.yaml

rbac.yaml

class.yaml

test-pod.yaml

test-pvc.yaml