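Installing and configuring the sunbeam/beta version

There are two ways to install: manual and automatic. The automatic method needs no extra configuration and finishes the whole installation with the two commands below.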

sudo snap install microstack --channel sunbeam/edge

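microstack install-script | bash -x

The install script installs MicroK8s and MicroStack with default settings. Installing manually, however, lets you choose the package versions you need and apply custom settings.

I went through the manual installation. The very first step is to check the state of the network interfaces.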

ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:ab:7d:5f brd ff:ff:ff:ff:ff:ff

altname enp2s0

inet 192.168.1.80/24 brd 192.168.1.255 scope global ens32

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:feab:7d5f/64 scope link

valid_lft forever preferred_lft forever

3: ens34: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 00:0c:29:ab:7d:69 brd ff:ff:ff:ff:ff:ff

altname enp2s2

As shown, ens32 is the virtual machine's current network interface, and ens34 is an additional interface with no address assigned. The additional interface will be used for OpenStack later.
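The same check can be scripted. The sketch below is only an illustration: the function name `ifaces_without_ipv4` is made up for this post, and it assumes the usual `ip addr` layout in which each interface starts with a numbered header line followed by `inet` lines for IPv4 addresses.

```shell
# Print the names of interfaces that have no IPv4 address assigned.
# Reads `ip addr` style text on stdin; the loopback interface is skipped.
ifaces_without_ipv4() {
  awk '
    # Header line of an interface block, e.g. "2: ens32: <BROADCAST,...> ..."
    /^[0-9]+: / {
      if (name != "" && name != "lo" && !has_v4) print name
      name = $2
      sub(/:$/, "", name)   # drop the trailing colon
      sub(/@.*/, "", name)  # drop a "@parent" suffix if present
      has_v4 = 0
      next
    }
    # An "inet" line (not "inet6") means an IPv4 address is assigned
    $1 == "inet" { has_v4 = 1 }
    END { if (name != "" && name != "lo" && !has_v4) print name }
  '
}

# Usage on a live host (not executed here):
#   ip addr | ifaces_without_ipv4
```

On the machine above this should print only ens34, the interface reserved for OpenStack.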

First, install MicroK8s.

sudo snap install microk8s --channel 1.25-strict/stable

microk8s (1.25-strict/stable) v1.25.10 from Canonical✓ installed

Then check the MicroK8s status.

sudo microk8s status --wait-ready

microk8s is running

high-availability: no

datastore master nodes: 127.0.0.1:19001

datastore standby nodes: none

addons:

enabled:

ha-cluster # (core) Configure high availability on the current node

helm # (core) Helm - the package manager for Kubernetes

helm3 # (core) Helm 3 - the package manager for Kubernetes

disabled:

cert-manager # (core) Cloud native certificate management

community # (core) The community addons repository

dashboard # (core) The Kubernetes dashboard

dns # (core) CoreDNS

host-access # (core) Allow Pods connecting to Host services smoothly

hostpath-storage # (core) Storage class; allocates storage from host directory

ingress # (core) Ingress controller for external access

mayastor # (core) OpenEBS MayaStor

metallb # (core) Loadbalancer for your Kubernetes cluster

metrics-server # (core) K8s Metrics Server for API access to service metrics

observability # (core) A lightweight observability stack for logs, traces and metrics

prometheus # (core) Prometheus operator for monitoring and logging

rbac # (core) Role-Based Access Control for authorisation

registry # (core) Private image registry exposed on localhost:32000

storage # (core) Alias to hostpath-storage add-on, deprecated
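When the setup is scripted, the enabled/disabled listing above can be parsed instead of read by eye. This is a minimal sketch, assuming the status text keeps the `enabled:` and `disabled:` section markers shown above; `addon_enabled` is a hypothetical helper name, not part of MicroK8s.

```shell
# Succeed (exit 0) if the given add-on is listed under "enabled:".
# $1 = add-on name; stdin = text produced by `microk8s status`.
addon_enabled() {
  awk -v want="$1" '
    $1 == "enabled:"  { section = "enabled";  next }
    $1 == "disabled:" { section = "disabled"; next }
    section == "enabled" && $1 == want { found = 1 }
    END { exit found ? 0 : 1 }
  '
}

# Usage on a live host (not executed here):
#   sudo microk8s status --wait-ready | addon_enabled dns || echo "dns is not enabled yet"
```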

After checking the status, enable the DNS add-on first.

sudo microk8s enable dns:8.8.8.8,8.8.4.4

Infer repository core for addon dns

Enabling DNS

Applying manifest

serviceaccount/coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

clusterrole.rbac.authorization.k8s.io/coredns created

clusterrolebinding.rbac.authorization.k8s.io/coredns created

Restarting kubelet

DNS is enabled

Next, enable the hostpath-storage add-on so that the host's own storage is used as the default backend.

sudo microk8s enable hostpath-storage

Infer repository core for addon hostpath-storage

Enabling default storage class.

WARNING: Hostpath storage is not suitable for production environments.

deployment.apps/hostpath-provisioner created

storageclass.storage.k8s.io/microk8s-hostpath created

serviceaccount/microk8s-hostpath created

clusterrole.rbac.authorization.k8s.io/microk8s-hostpath created

clusterrolebinding.rbac.authorization.k8s.io/microk8s-hostpath created

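Storage will be available soon.

Next, enable MetalLB, the load balancer service. The IP address pool must be on the same network as the virtual machine.

MetalLB is an open source project for providing load balancers in bare-metal Kubernetes environments.

See the official MetalLB documentation for details.

# Assign about 10 unused IP addresses from the current network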

sudo microk8s enable metallb 192.168.1.200-192.168.1.210

Infer repository core for addon metallb

Enabling MetalLB

Applying Metallb manifest

customresourcedefinition.apiextensions.k8s.io/addresspools.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bfdprofiles.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bgpadvertisements.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bgppeers.metallb.io created

customresourcedefinition.apiextensions.k8s.io/communities.metallb.io created

customresourcedefinition.apiextensions.k8s.io/ipaddresspools.metallb.io created

customresourcedefinition.apiextensions.k8s.io/l2advertisements.metallb.io created

namespace/metallb-system created

serviceaccount/controller created

serviceaccount/speaker created

clusterrole.rbac.authorization.k8s.io/metallb-system:controller created

clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created

role.rbac.authorization.k8s.io/controller created

role.rbac.authorization.k8s.io/pod-lister created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created

rolebinding.rbac.authorization.k8s.io/controller created

secret/webhook-server-cert created

service/webhook-service created

rolebinding.rbac.authorization.k8s.io/pod-lister created

daemonset.apps/speaker created

deployment.apps/controller created

validatingwebhookconfiguration.admissionregistration.k8s.io/validating-webhook-configuration created

Waiting for Metallb controller to be ready.

error: timed out waiting for the condition on deployments/controller

MetalLB controller is still not ready

deployment.apps/controller condition met

ipaddresspool.metallb.io/default-addresspool created

l2advertisement.metallb.io/default-advertise-all-pools created

MetalLB is enabled

If you want to reach the OpenStack API from hosts other than localhost, this IP address range must lie within the subnet of the primary network interface. The range must contain at least 10 addresses.
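Both conditions (the range sits inside the primary interface's subnet and holds at least 10 addresses) can be checked before enabling MetalLB. The sketch below is an illustration only; `ip_to_int` and `range_ok` are hypothetical helper names, and the arithmetic assumes a shell with 64-bit integer arithmetic such as bash or dash on a 64-bit system.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<EOF
$1
EOF
  echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

# Succeed if START-END lies inside the subnet of CIDR and holds at least MIN addresses.
# $1 = range "START-END", $2 = interface address "a.b.c.d/len", $3 = MIN (default 10)
range_ok() {
  local start end net len min lo hi mask base
  start=${1%-*}; end=${1#*-}
  net=${2%/*};   len=${2#*/}
  min=${3:-10}
  lo=$(ip_to_int "$start")
  hi=$(ip_to_int "$end")
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  base=$(( $(ip_to_int "$net") & mask ))
  [ $(( lo & mask )) -eq "$base" ] &&
  [ $(( hi & mask )) -eq "$base" ] &&
  [ $(( hi - lo + 1 )) -ge "$min" ]
}

# Usage with the values from this post (ens32 is 192.168.1.80/24):
#   range_ok 192.168.1.200-192.168.1.210 192.168.1.80/24 && echo "pool looks usable"
```

The check does not detect addresses that are already in use on the network; that still has to be verified separately.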

Once the load balancer is set up, add the current user to the snap_microk8s group and give that user ownership of ~/.kube so that it can use MicroK8s.

sudo usermod -a -G snap_microk8s $USER

sudo chown -f -R $USER ~/.kube

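newgrp snap_microk8s

In addition, the command below creates a file named no-cert-reissue in the /var/snap/microk8s/current/var/lock/ directory. While this file exists, MicroK8s does not automatically reissue its certificates (for example after the host's IP address changes), which avoids the service restarts that a reissue would trigger.

touch /var/snap/microk8s/current/var/lock/no-cert-reissue

Next, install Juju. After the installation, create the .local/share directory so that Juju has a dedicated place to store its data and configuration files.

Juju is a 'Charmed Operator' framework: an open source platform used here to deploy, manage, and operate MicroStack.

See the Juju documentation for more details.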

sudo snap install juju --channel 3.1/stable

juju (3.1/stable) 3.1.2 from Canonical✓ installed

# Juju's settings are stored here

mkdir -p ~/.local/share

Finally, install the required packages.

# Install the microstack package

sudo snap install microstack --channel sunbeam/beta

microstack (sunbeam/edge) yoga from Canonical✓ installed

# Provides the virtualization layer

sudo snap install --channel yoga/beta openstack-hypervisor

openstack-hypervisor (yoga/beta) yoga from Canonical✓ installed

# For using the OpenStack CLI commands

sudo snap install openstackclients --channel xena/stable

openstackclients (xena/stable) xena from Canonical✓ installed

All required components are now installed, but to deploy MicroStack onto Kubernetes automatically, Juju has to be bootstrapped. Juju bootstrapping is the initial setup of Juju in a cloud or infrastructure environment.

# Command that starts the Juju bootstrapping

microstack bootstrap

Checking for presence of Juju ... done

Checking for presence of microk8s ... done

Checking for presence of openstack-hypervisor ... done

Checking health of openstack-hypervisor ... done

Bootstrapping Juju into microk8s ... done

Initializing Terraform from provider mirror ... done

This version was tested using 3.1.0 juju version 3.1.2 may have compatibility issues

unknown facade SecretBackendsManager

unexpected facade SecretBackendsManager found, unable to decipher version to use

unknown facade SecretBackendsRotateWatcher

unexpected facade SecretBackendsRotateWatcher found, unable to decipher version to use

This version was tested using 3.1.0 juju version 3.1.2 may have compatibility issues

This version was tested using 3.1.0 juju version 3.1.2 may have compatibility issues

Deploying OpenStack Control Plane to Kubernetes ... done

unknown facade SecretBackendsManager

unexpected facade SecretBackendsManager found, unable to decipher version to use

unknown facade SecretBackendsRotateWatcher

unexpected facade SecretBackendsRotateWatcher found, unable to decipher version to use

This version was tested using 3.1.0 juju version 3.1.2 may have compatibility issues

This version was tested using 3.1.0 juju version 3.1.2 may have compatibility issues

Updating hypervisor identity configuration ... done

unknown facade SecretBackendsManager

unexpected facade SecretBackendsManager found, unable to decipher version to use

unknown facade SecretBackendsRotateWatcher

unexpected facade SecretBackendsRotateWatcher found, unable to decipher version to use

This version was tested using 3.1.0 juju version 3.1.2 may have compatibility issues

This version was tested using 3.1.0 juju version 3.1.2 may have compatibility issues

Updating hypervisor RabbitMQ configuration ... done

unknown facade SecretBackendsManager

unexpected facade SecretBackendsManager found, unable to decipher version to use

unknown facade SecretBackendsRotateWatcher

unexpected facade SecretBackendsRotateWatcher found, unable to decipher version to use

This version was tested using 3.1.0 juju version 3.1.2 may have compatibility issues

This version was tested using 3.1.0 juju version 3.1.2 may have compatibility issues

unknown facade SecretBackendsManager

unexpected facade SecretBackendsManager found, unable to decipher version to use

unknown facade SecretBackendsRotateWatcher

unexpected facade SecretBackendsRotateWatcher found, unable to decipher version to use

This version was tested using 3.1.0 juju version 3.1.2 may have compatibility issues

This version was tested using 3.1.0 juju version 3.1.2 may have compatibility issues

Updating hypervisor OVN configuration ... done

Node has been bootstrapped as a CONVERGED node

To follow the process in real time, open two more consoles on the server as the same user and run the commands below.

# Watch the Juju progress in real time

watch --color -- juju status --color -m openstack

# Watch changes in the cluster in real time

watch microk8s.kubectl get all -A

The bootstrap takes between 20 and 40 minutes.
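Instead of watching the screen for the whole duration, the plain-text status can be filtered for units that have not settled yet. This is a rough sketch: `units_not_ready` is a made-up helper, and it assumes that unit rows are the only rows whose first column contains a slash and that a settled unit reports `active` and `idle`, as in the status output in this post.

```shell
# Print every Juju unit that is not yet "active" / "idle".
# Reads plain `juju status` text on stdin (run it without --color so that
# no escape sequences end up in the columns).
units_not_ready() {
  awk 'index($1, "/") && ($2 != "active" || $3 != "idle") { print $1 }'
}

# Usage on a live host (not executed here): poll until nothing is pending.
#   while [ -n "$(juju status -m openstack | units_not_ready)" ]; do
#     sleep 30
#   done
```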

Once the bootstrap completes without problems, you can check the current state of OpenStack.

juju status --color -m openstack

Model Controller Cloud/Region Version SLA Timestamp

openstack microk8s-localhost microk8s/localhost 3.1.2 unsupported 18:08:14Z

App Version Status Scale Charm Channel Rev Address Exposed Message

certificate-authority active 1 tls-certificates-operator stable 22 10.152.183.141 no

glance active 1 glance-k8s yoga/beta 27 10.152.183.244 no

horizon active 1 horizon-k8s yoga/beta 32 10.152.183.184 no http://192.168.1.202:80/openstack-horizon

keystone active 1 keystone-k8s yoga/beta 82 10.152.183.186 no

mysql 8.0.32-0ubuntu0.22.04.2 active 1 mysql-k8s 8.0/stable 75 10.152.183.67 no Primary

neutron active 1 neutron-k8s yoga/beta 29 10.152.183.157 no

nova active 1 nova-k8s yoga/beta 20 10.152.183.254 no

ovn-central active 1 ovn-central-k8s 22.03/beta 35 10.152.183.99 no

ovn-relay active 1 ovn-relay-k8s 22.03/beta 25 192.168.1.200 no

placement active 1 placement-k8s yoga/beta 18 10.152.183.25 no

rabbitmq 3.11.3 active 1 rabbitmq-k8s 3.11/beta 10 192.168.1.201 no

traefik 2.9.6 active 1 traefik-k8s 1.0/stable 110 192.168.1.202 no

Unit Workload Agent Address Ports Message

certificate-authority/0* active idle 10.1.243.204

glance/0* active idle 10.1.243.207

horizon/0* active idle 10.1.243.213

keystone/0* active idle 10.1.243.218

mysql/0* active idle 10.1.243.202 Primary

neutron/0* active idle 10.1.243.208

nova/0* active idle 10.1.243.219

ovn-central/0* active idle 10.1.243.215

ovn-relay/0* active idle 10.1.243.210

placement/0* active idle 10.1.243.209

rabbitmq/0* active idle 10.1.243.212

traefik/0* active idle 10.1.243.205

The current state of the cluster can be checked in the same way.

sudo microk8s.kubectl get all -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system pod/calico-node-h2h2q 1/1 Running 0 39m

kube-system pod/coredns-d489fb88-j2mk2 1/1 Running 0 38m

kube-system pod/calico-kube-controllers-d8b9b6478-qkjnn 1/1 Running 0 39m

kube-system pod/hostpath-provisioner-766849dd9d-srkpk 1/1 Running 0 36m

metallb-system pod/controller-56c4696b5-spbpx 1/1 Running 0 36m

metallb-system pod/speaker-zsxfn 1/1 Running 0 36m

controller-microk8s-localhost pod/controller-0 3/3 Running 1 (21m ago) 22m

controller-microk8s-localhost pod/modeloperator-8449b84544-brbsv 1/1 Running 0 21m

openstack pod/modeloperator-c59b89747-mgll6 1/1 Running 0 21m

openstack pod/certificate-authority-0 1/1 Running 0 20m

openstack pod/mysql-0 2/2 Running 0 20m

openstack pod/glance-0 2/2 Running 0 20m

openstack pod/neutron-0 2/2 Running 0 20m

openstack pod/ovn-relay-0 2/2 Running 0 20m

openstack pod/rabbitmq-0 2/2 Running 0 19m

openstack pod/horizon-0 2/2 Running 0 19m

openstack pod/keystone-0 2/2 Running 0 19m

openstack pod/traefik-0 2/2 Running 0 20m

openstack pod/ovn-central-0 4/4 Running 0 19m

openstack pod/placement-0 2/2 Running 0 20m

openstack pod/nova-0 4/4 Running 0 18m

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 39m

kube-system service/kube-dns ClusterIP 10.152.183.10 <none> 53/UDP,53/TCP,9153/TCP 38m

metallb-system service/webhook-service ClusterIP 10.152.183.27 <none> 443/TCP 36m

controller-microk8s-localhost service/controller-service ClusterIP 10.152.183.147 <none> 17070/TCP 22m

controller-microk8s-localhost service/modeloperator ClusterIP 10.152.183.146 <none> 17071/TCP 21m

openstack service/modeloperator ClusterIP 10.152.183.156 <none> 17071/TCP 21m

openstack service/mysql ClusterIP 10.152.183.67 <none> 65535/TCP 20m

openstack service/mysql-endpoints ClusterIP None <none> <none> 20m

openstack service/certificate-authority ClusterIP 10.152.183.141 <none> 65535/TCP 20m

openstack service/traefik-endpoints ClusterIP None <none> <none> 20m

openstack service/certificate-authority-endpoints ClusterIP None <none> <none> 20m

openstack service/glance-endpoints ClusterIP None <none> <none> 20m

openstack service/neutron-endpoints ClusterIP None <none> <none> 20m

openstack service/placement-endpoints ClusterIP None <none> <none> 20m

openstack service/ovn-relay-endpoints ClusterIP None <none> <none> 20m

openstack service/rabbitmq-endpoints ClusterIP None <none> <none> 20m

openstack service/ovn-central-endpoints ClusterIP None <none> <none> 20m

openstack service/horizon-endpoints ClusterIP None <none> <none> 20m

openstack service/neutron ClusterIP 10.152.183.157 <none> 9696/TCP 20m

openstack service/placement ClusterIP 10.152.183.25 <none> 8778/TCP 20m

openstack service/ovn-relay LoadBalancer 10.152.183.133 192.168.1.200 6642:31222/TCP 20m

openstack service/keystone-endpoints ClusterIP None <none> <none> 19m

openstack service/glance ClusterIP 10.152.183.244 <none> 9292/TCP 20m

openstack service/rabbitmq LoadBalancer 10.152.183.142 192.168.1.201 5672:31244/TCP,15672:31620/TCP 20m

openstack service/nova-endpoints ClusterIP None <none> <none> 19m

openstack service/horizon ClusterIP 10.152.183.184 <none> 80/TCP 20m

openstack service/ovn-central ClusterIP 10.152.183.99 <none> 6641/TCP,6642/TCP 20m

openstack service/keystone ClusterIP 10.152.183.186 <none> 5000/TCP 19m

openstack service/nova ClusterIP 10.152.183.254 <none> 8774/TCP 19m

openstack service/traefik LoadBalancer 10.152.183.60 192.168.1.202 80:31408/TCP,443:31255/TCP 20m

openstack service/mysql-primary ClusterIP 10.152.183.197 <none> 3306/TCP 16m

openstack service/mysql-replicas ClusterIP 10.152.183.90 <none> 3306/TCP 16m

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-system daemonset.apps/calico-node 1 1 1 1 1 kubernetes.io/os=linux 39m

metallb-system daemonset.apps/speaker 1 1 1 1 1 kubernetes.io/os=linux 36m

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE

kube-system deployment.apps/calico-kube-controllers 1/1 1 1 39m

kube-system deployment.apps/coredns 1/1 1 1 38m

kube-system deployment.apps/hostpath-provisioner 1/1 1 1 37m

metallb-system deployment.apps/controller 1/1 1 1 36m

controller-microk8s-localhost deployment.apps/modeloperator 1/1 1 1 21m

openstack deployment.apps/modeloperator 1/1 1 1 21m

NAMESPACE NAME DESIRED CURRENT READY AGE

kube-system replicaset.apps/calico-kube-controllers-d8b9b6478 1 1 1 39m

kube-system replicaset.apps/coredns-d489fb88 1 1 1 38m

kube-system replicaset.apps/hostpath-provisioner-766849dd9d 1 1 1 36m

metallb-system replicaset.apps/controller-56c4696b5 1 1 1 36m

controller-microk8s-localhost replicaset.apps/modeloperator-8449b84544 1 1 1 21m

openstack replicaset.apps/modeloperator-c59b89747 1 1 1 21m

NAMESPACE NAME READY AGE

controller-microk8s-localhost statefulset.apps/controller 1/1 22m

openstack statefulset.apps/certificate-authority 1/1 20m

openstack statefulset.apps/mysql 1/1 20m

openstack statefulset.apps/glance 1/1 20m

openstack statefulset.apps/neutron 1/1 20m

openstack statefulset.apps/ovn-relay 1/1 20m

openstack statefulset.apps/rabbitmq 1/1 19m

openstack statefulset.apps/horizon 1/1 19m

openstack statefulset.apps/keystone 1/1 19m

openstack statefulset.apps/traefik 1/1 20m

openstack statefulset.apps/ovn-central 1/1 19m

openstack statefulset.apps/placement 1/1 20m

openstack statefulset.apps/nova 1/1 18m

Once the resources are confirmed, the OpenStack cloud needs its initial configuration. Since MicroStack is in use here, run the initial configuration as follows.

microstack configure -o demo_openrc

Initializing Terraform from provider mirror ... done

Username to use for access to OpenStack (demo):

Password to use for access to OpenStack (ultAzOz8ti8Z):

Network range to use for project network (192.168.122.0/24): # OpenStack internal network

Setup security group rules for SSH and ICMP ingress [y/n] (y):

Local or remote access to VMs [local/remote] (local): remote # Choose remote; only then does SSH from the host PC keep working and the OpenStack Dashboard session become reachable

CIDR of OpenStack external network - arbitrary but must not be in use (10.20.20.0/24): 192.168.1.0/24 # OpenStack external network

Start of IP allocation range for external network (192.168.1.2): 192.168.1.3

End of IP allocation range for external network (192.168.1.254): 192.168.1.70

Network type for access to external network [flat/vlan] (flat):

Configuring OpenStack cloud for use ... done

Writing openrc to demo_openrc ... done

Generating openrc for cloud usage ... done

? Updating hypervisor external network configuration ...

After the initial configuration, the command below writes the admin account's credentials to a file.

microstack openrc > admin_openrc

To reach the web UI, find out which IP address the OpenStack Dashboard (Horizon) was assigned.

juju status -m openstack 2>/dev/null | grep horizon | head -n 1 | awk '{print $9}'

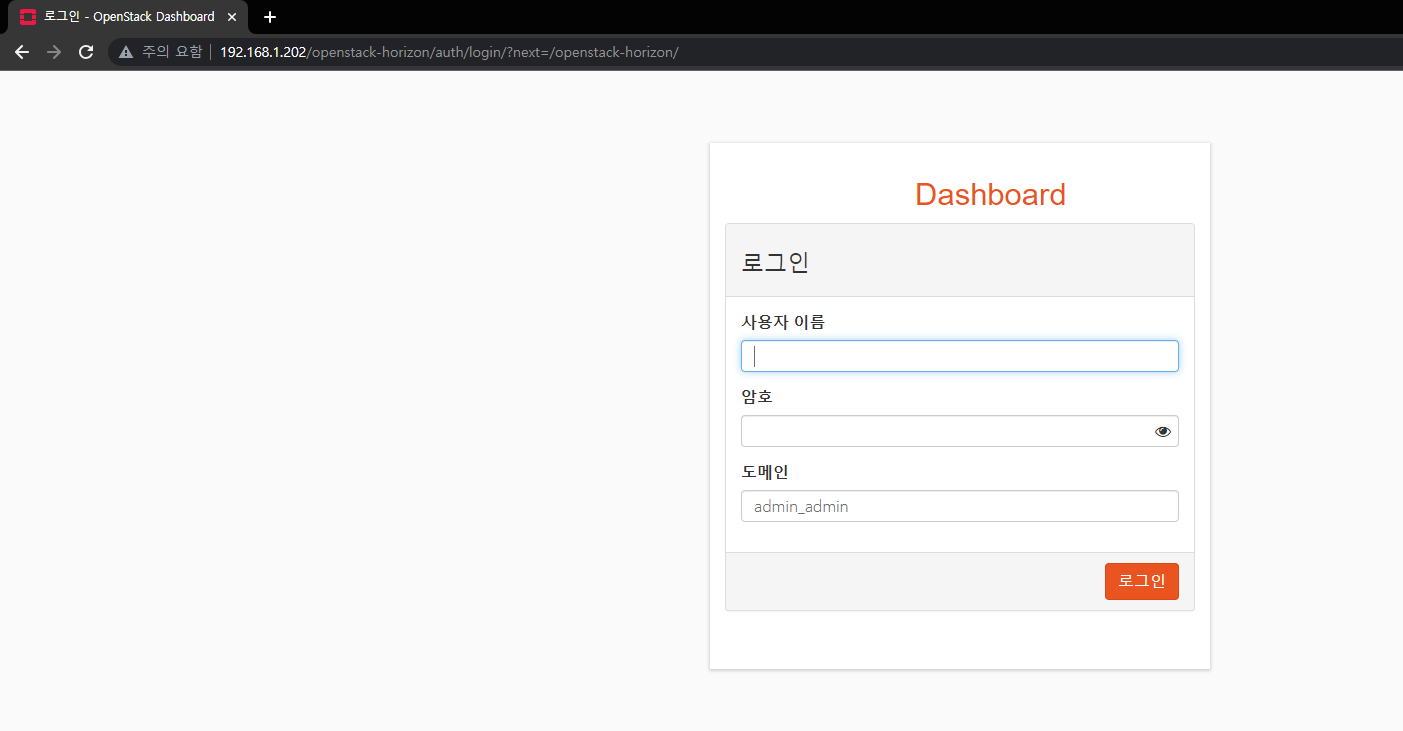

http://192.168.1.202:80/openstack-horizon바로 접속을 해보면:
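The `awk '{print $9}'` pipeline above works because the Version column of the horizon row is empty, which puts the message in the ninth field. A slightly more tolerant variant, sketched below under the assumption that the message is the only field in that row starting with http:// or https://, looks for the URL itself. `horizon_url` is a hypothetical helper name.

```shell
# Print the first URL found in the "horizon" application row.
# Reads plain `juju status` text on stdin.
horizon_url() {
  awk '$1 == "horizon" {
    for (i = 1; i <= NF; i++)
      if ($i ~ /^https?:\/\//) { print $i; exit }
  }'
}

# Usage on a live host (not executed here):
#   juju status -m openstack 2>/dev/null | horizon_url
```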

To log in as the demo user, look up that user's credentials. Open the demo_openrc file saved in the current directory.

ls

admin_openrc config demo_openrc snap

cat demo_openrc

export OS_AUTH_URL=http://192.168.1.202:80/openstack-keystone

export OS_USERNAME=demo

export OS_PASSWORD=MqlcF4f4t3sp

export OS_USER_DOMAIN_NAME=users

export OS_PROJECT_DOMAIN_NAME=users

export OS_PROJECT_NAME=user

export OS_AUTH_VERSION=3

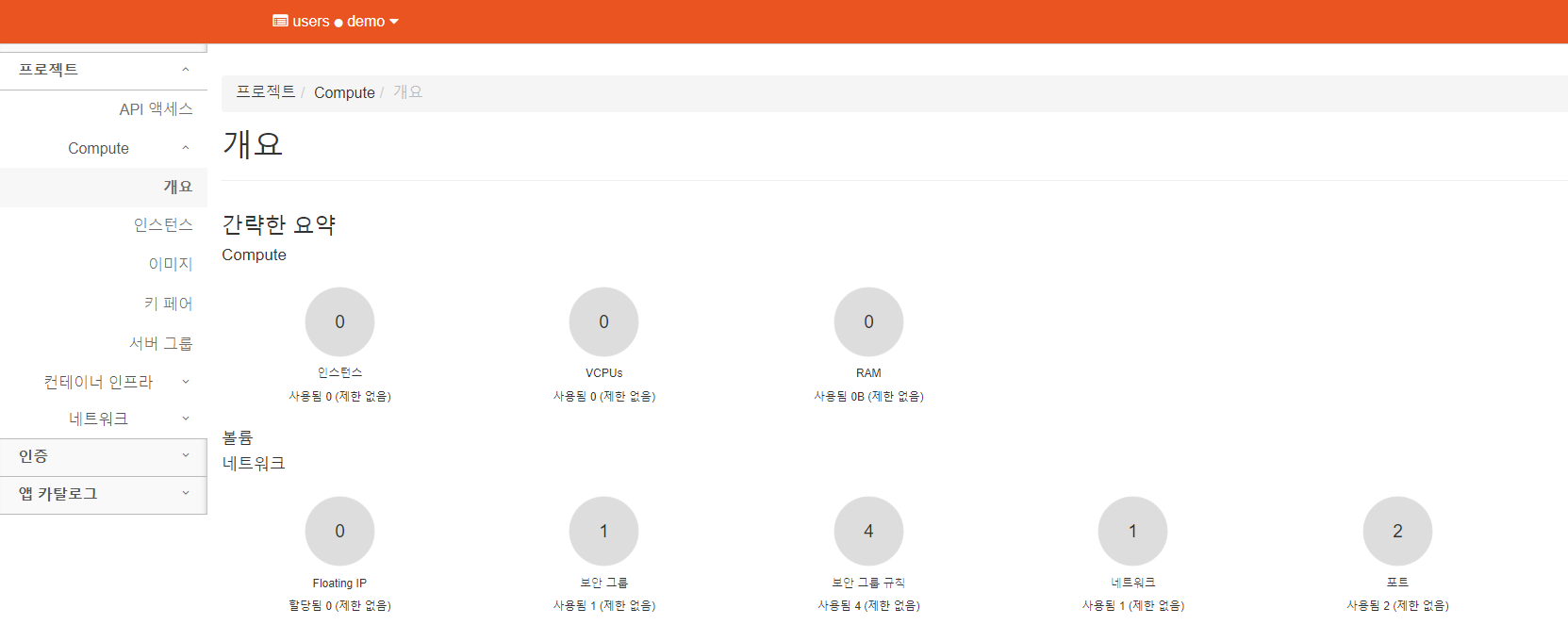

export OS_IDENTITY_API_VERSION=3위에 나와있는 정보로 Dashboard을 demo 유저로 로그인 해본다.

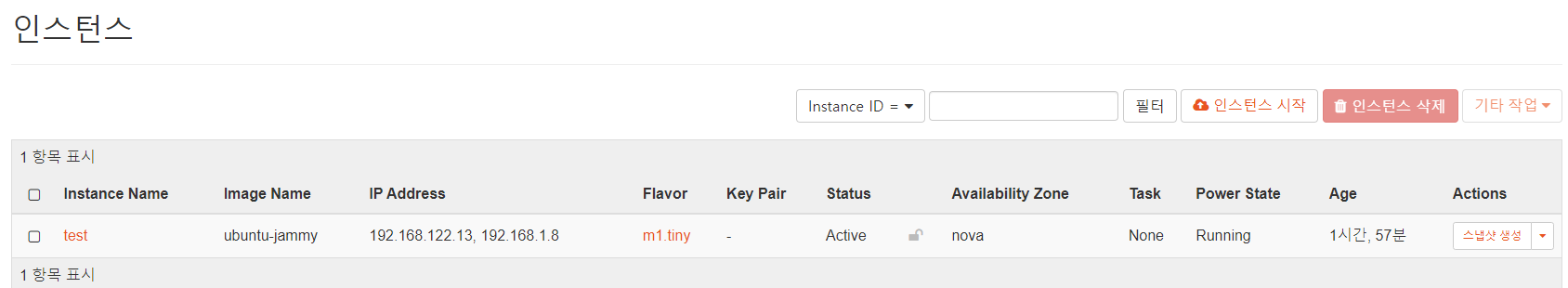
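The same demo_openrc file also works for the command line client installed earlier. The sketch below sources the file and verifies that the variables the client needs are present before anything is run; `load_openrc` is a made-up helper, and the list of required variables is an assumption based on the file contents shown above.

```shell
# Source an openrc file and confirm the key OS_* variables are set.
# $1 = path to an openrc file such as demo_openrc
load_openrc() {
  local file var val
  # "." searches PATH for names without a slash in POSIX shells, so anchor
  # bare file names to the current directory.
  case $1 in
    */*) file=$1 ;;
    *)   file=./$1 ;;
  esac
  [ -r "$file" ] || { echo "cannot read $file" >&2; return 1; }
  . "$file"
  for var in OS_AUTH_URL OS_USERNAME OS_PASSWORD OS_PROJECT_NAME; do
    eval "val=\${$var:-}"
    [ -n "$val" ] || { echo "$var is not set" >&2; return 1; }
  done
}

# Usage on a live host (not executed here):
#   load_openrc demo_openrc && openstack server list
```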

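I created a test virtual machine.

To communicate with this test machine, bring up the ens34 network interface that was set aside for OpenStack earlier. Also check that this interface is attached to the router's external interface (the br-ex bridge).

# Bring the ens34 interface UP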

sudo ip link set up dev ens34

# Confirm that the ens34 interface has been added to br-ex

sudo openstack-hypervisor.ovs-vsctl show

35c97266-ccfd-40a7-afb2-7672e7aa6158

Bridge br-ex

datapath_type: system

Port ens34

Interface ens34

Port patch-provnet-20661610-1756-402f-8df8-4e027e639e2e-to-br-int

Interface patch-provnet-20661610-1756-402f-8df8-4e027e639e2e-to-br-int

type: patch

options: {peer=patch-br-int-to-provnet-20661610-1756-402f-8df8-4e027e639e2e}

Port br-ex

Interface br-ex

type: internal

Bridge br-int

fail_mode: secure

datapath_type: system

Port patch-br-int-to-provnet-20661610-1756-402f-8df8-4e027e639e2e

Interface patch-br-int-to-provnet-20661610-1756-402f-8df8-4e027e639e2e

type: patch

options: {peer=patch-provnet-20661610-1756-402f-8df8-4e027e639e2e-to-br-int}

Port br-int

Interface br-int

type: internal

ovs_version: "3.1.0"

## If it was not added automatically

sudo openstack-hypervisor.ovs-vsctl add-port br-ex ens34

The drawback of this version is that it does not provide Cinder volumes. Instances therefore use the host PC's own storage as their disk space.
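The br-ex check and the conditional add-port step described above can be combined so that the port is only added when it is missing. This is a sketch under one assumption: that the snap's `openstack-hypervisor.ovs-vsctl` command accepts the standard `list-ports` subcommand of `ovs-vsctl`. `port_listed` is a hypothetical helper name.

```shell
# Succeed if PORT appears as a whole line in a port listing read from stdin,
# such as the output of `ovs-vsctl list-ports BRIDGE`.
# $1 = port name, e.g. ens34
port_listed() {
  grep -qxF -- "$1"
}

# Usage on a live host (not executed here):
#   if ! sudo openstack-hypervisor.ovs-vsctl list-ports br-ex | port_listed ens34; then
#     sudo openstack-hypervisor.ovs-vsctl add-port br-ex ens34
#   fi
```

Matching the whole line avoids a false positive when another port name merely contains the one being looked for.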